The field of generative AI (gen AI) is evolving rapidly, and Red Hat Enterprise Linux AI (RHEL AI) is at the forefront of this transformation. RHEL AI 1.4 introduces support for Granite 3.1 8B, a powerful new model with multilingual support and a larger context window. This release also adds the ability to evaluate customized and trained models with Document Knowledge-bench (DK-bench), a benchmark designed to measure the knowledge a model has acquired. Finally, we’re introducing a developer preview of a new graphical UI that simplifies data ingestion and the writing of InstructLab qna.yaml files.

Support for the Granite 3.1 8B model

RHEL AI 1.4 adds support for Granite 3.1 8B, the latest addition to the open source-licensed Granite model family. This model adds multilingual support for inference, including English, German, Spanish, French, Japanese, Chinese and more. In addition, as a developer preview, users can customize the Granite 3.1 starter model with taxonomy/knowledge in German, Spanish, French, Portuguese and Italian. Using the LAB methodology with InstructLab synthetic data generation and training, users can now more readily create, train and tune Granite 3.1 8B models to meet language-specific requirements and business needs.

Granite 3.1 8B also offers a larger context window, which is the maximum amount of text or information a model can process when generating a response or interpreting a query. Granite 3.1 8B has a usable window of 32k tokens (words or parts of words), a significant step up from the previous context size of 4k tokens. Because far more text fits in a single prompt, the larger window makes it easier to summarize long documents and to implement Retrieval-Augmented Generation (RAG) tasks, which supply retrieved passages to the model alongside the query.
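To make the difference between a 4k and a 32k window more concrete, here is a minimal Python sketch that estimates whether a document fits in a single prompt and, if it does not, splits it into chunks the way a simple RAG pipeline might. It assumes a rough ratio of 1.3 tokens per word, treats "4k" and "32k" as 4,096 and 32,768 tokens, and reserves 1,000 tokens for the model's answer. These numbers and the function names are illustrative assumptions, not Granite tokenizer figures or a RHEL AI API.

```python
import math
from fractions import Fraction

# Assumption: ~1.3 tokens per English word. Real counts depend on the
# model's tokenizer; this ratio is illustrative, not a Granite figure.
TOKENS_PER_WORD = Fraction(13, 10)

# Assumption: "4k" and "32k" read as 4,096 and 32,768 tokens.
OLD_CONTEXT_TOKENS = 4 * 1024
NEW_CONTEXT_TOKENS = 32 * 1024


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count from whitespace-separated words."""
    return math.ceil(len(text.split()) * TOKENS_PER_WORD)


def fits_in_context(text: str, context_tokens: int, reserved_for_output: int = 1_000) -> bool:
    """True if the prompt plus room for the model's answer fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= context_tokens


def chunk_words(text: str, max_tokens: int) -> list[str]:
    """Split text into word-aligned chunks whose estimated size is <= max_tokens."""
    words = text.split()
    words_per_chunk = max(1, math.floor(max_tokens / TOKENS_PER_WORD))
    return [
        " ".join(words[i:i + words_per_chunk])
        for i in range(0, len(words), words_per_chunk)
    ]


if __name__ == "__main__":
    doc = "word " * 10_000  # stand-in for a ~10,000-word document
    print(estimate_tokens(doc))                      # 13000
    print(fits_in_context(doc, OLD_CONTEXT_TOKENS))  # False
    print(fits_in_context(doc, NEW_CONTEXT_TOKENS))  # True
    # With the smaller window, the same document needs several prompts.
    print(len(chunk_words(doc, OLD_CONTEXT_TOKENS - 1_000)))  # 5
```

Under these assumptions, a document of roughly 10,000 words fits in one 32k prompt but has to be split across five prompts with a 4k window, which is why the larger window simplifies summarization and RAG.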

New graphical user interface for skills and knowledge contributions

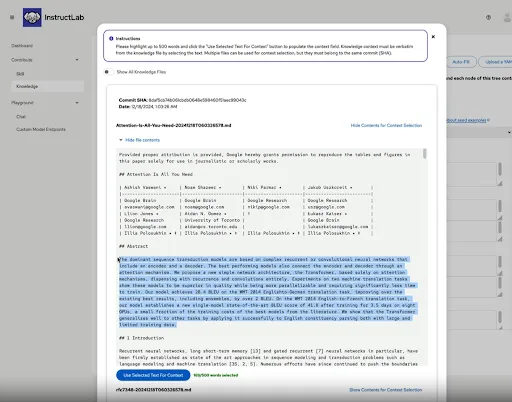

Usability is key for nearly every gen AI platform, so RHEL AI 1.4 adds a new graphical user interface for data ingestion and for qna.yaml, the configuration file that formats knowledge datasets and skill contributions for InstructLab. Available as a developer preview, the UI makes data ingestion and chunking with Docling easier and simplifies how end users contribute their own skills and knowledge through RHEL AI. We will continue to refine this experience to make ingestion, often a tedious task, easier and more scalable for a broader set of users.

Introducing Document Knowledge-bench (DK-bench) for model evaluation

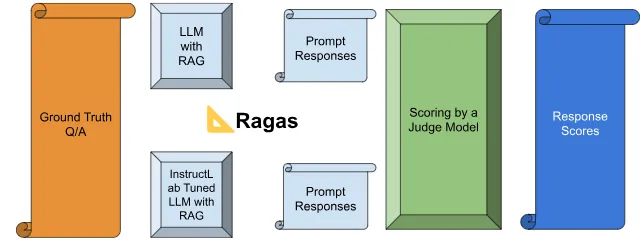

One of the key goals of RHEL AI and InstructLab is to make fine-tuning, in which a base model is customized with relevant, private enterprise data, easier and more accessible. Fine-tuning tends to yield a more effective, higher-performing AI system, and InstructLab (and RHEL AI) makes it possible with a relatively small sample of enterprise data paired with synthetic data and multi-phase training. That said, there needs to be an effective way to compare the untuned base model with the new, fine-tuned result. In RHEL AI 1.4, DK-bench provides this capability: using the LLM-as-judge technique, in which a separate model scores the responses under evaluation, it quickly and efficiently compares the outputs of the base model and the fine-tuned model within RHEL AI.
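The general LLM-as-judge pattern can be sketched in a few lines of Python. This is not DK-bench's implementation; it only illustrates the idea of pairing each question with a reference answer, asking both the base and fine-tuned models, letting a judge score each response, and comparing the averages. The 1-5 scale, the `EvalItem` and `compare_models` names, and `overlap_judge` (a toy word-overlap scorer standing in for a real judge model so the sketch runs offline) are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable

# Hypothetical judge signature: (question, reference, response) -> score,
# 1 (poor) to 5 (matches reference). A real LLM-as-judge setup would
# prompt a separate judge model here instead.
Judge = Callable[[str, str, str], int]
Model = Callable[[str], str]


@dataclass
class EvalItem:
    question: str
    reference: str  # ground-truth answer taken from the enterprise documents


def overlap_judge(question: str, reference: str, response: str) -> int:
    """Toy stand-in for a judge model: scores 1-5 by word overlap with the reference."""
    ref_words = set(reference.lower().split())
    if not ref_words:
        return 1
    hit_rate = len(ref_words & set(response.lower().split())) / len(ref_words)
    return 1 + round(hit_rate * 4)


def compare_models(items: list[EvalItem], base: Model, tuned: Model, judge: Judge) -> dict[str, float]:
    """Ask both models every question and average the judge's scores per model."""
    scores: dict[str, list[int]] = {"base": [], "tuned": []}
    for item in items:
        for name, model in (("base", base), ("tuned", tuned)):
            response = model(item.question)
            scores[name].append(judge(item.question, item.reference, response))
    return {name: mean(values) for name, values in scores.items()}


if __name__ == "__main__":
    items = [
        EvalItem("What is the refund window?", "30 days from purchase"),
        EvalItem("Who approves travel?", "the department manager"),
    ]
    known = {item.question: item.reference for item in items}
    base_model = lambda q: "I do not know"  # untuned model lacks the private data
    tuned_model = lambda q: known[q]        # fine-tuned model learned it
    print(compare_models(items, base_model, tuned_model, overlap_judge))
```

Passing the judge in as a function keeps the comparison loop independent of how scoring is done, so the toy scorer could be swapped for a call to an actual judge model without changing `compare_models`.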

Availability of RHEL AI on AWS and Azure Marketplaces

AWS Marketplace and Azure Marketplace are popular channels for deploying software on their respective clouds, and RHEL AI is now available on both.